The secret to boosting your AI adoption? A culture of experimentation

In a world racing toward AI maturity, most companies are still stuck at the starting line. The problem isn’t a lack of interest or tools. It’s that traditional processes demand certainty and control, while effective AI adoption depends on exploration and learning.

At Modus Create, we recently ran a structured AI tooling experiment to test what happens when you give product development teams the freedom to work differently. We assigned two teams to build the same product with identical scope. One team used AI-assisted coding tools, while the other followed traditional software development methodologies.

The AI-assisted team had 30% fewer people and still delivered the same scope 60% faster. While the results were impressive, what mattered more than speed was how we got there.

We didn’t start with a polished business case. We started with a problem worth solving.

What can business and technology leaders learn from this experiment? Here are a few essential takeaways.

Start with the problem you’re solving, and the ROI will follow

If your product development team doesn’t clearly understand what problem they’re trying to solve, they’ll either build the wrong thing faster or never start at all.

For us, the opportunity was clear: AI-powered code generation is evolving rapidly, and we saw a chance to evolve with it.

While our traditional product delivery model continues to serve clients well, we wanted to explore whether AI adoption could help us meet growing expectations for speed and efficiency, without compromising the quality we expect and our clients rely on.

So we asked: Can AI-assisted coding reduce time and cost without sacrificing quality?

From there, we didn’t rely on vague aspirations or abstract goals. Instead, we defined clear, testable hypotheses, such as:

- A two-person AI-augmented team can deliver the same scope as a three-person traditional team

- Time spent on infrastructure tasks will decrease by 40%

- Engineers can be productive in unfamiliar tech stacks with support from AI tools

Each of these could be answered with a simple true or false. A lack of ambiguity kept the team aligned and the experiment grounded. We weren’t pitching a vision. We were testing reality. This approach builds confidence because it replaces assumptions with evidence and turns emerging technology into something practical and provable.
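To make “true or false” concrete, here is a minimal Python sketch of how hypotheses like these could be encoded as binary checks against measured results. The `Hypothesis` class, the `evaluate` function, and the metric names are hypothetical illustrations, not tooling from the experiment. The sketch also assumes that “decrease by 40%” means “by at least 40%.” The third hypothesis is left out because it would first need a team-agreed metric for “productive.”

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Measured results keyed by metric name. Names and values are hypothetical
# placeholders, not data from the Modus Create experiment.
Metrics = Dict[str, float]


@dataclass(frozen=True)
class Hypothesis:
    statement: str
    # Returns True if the hypothesis is validated, False if it is refuted.
    check: Callable[[Metrics], bool]


def evaluate(hypotheses: List[Hypothesis], metrics: Metrics) -> Dict[str, bool]:
    """Map each hypothesis statement to a strictly binary outcome."""
    return {h.statement: bool(h.check(metrics)) for h in hypotheses}


HYPOTHESES = [
    Hypothesis(
        "Two-person AI-augmented team delivers the same scope as a three-person team",
        lambda m: m["ai_team_size"] <= 2
        and m["ai_scope_delivered"] >= m["baseline_scope"],
    ),
    Hypothesis(
        # Assumption: "decrease by 40%" is read as "decrease by at least 40%".
        "Infrastructure time decreases by at least 40%",
        lambda m: (m["baseline_infra_hours"] - m["ai_infra_hours"])
        / m["baseline_infra_hours"]
        >= 0.40,
    ),
]
```

Keeping each check as a predicate that returns a plain `bool` mirrors the rule above: a hypothesis is either validated or refuted, with no partial credit.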

Foundation principles for a culture of experimentation

Before jumping into structure, let’s ground our AI experiment in the principles that shaped it:

Validated learning from The Lean Startup by Eric Ries: Rather than measuring success by output, we measured it by what we learned. One of our most important deliverables was a living handbook that captured how our product engineers used AI coding agents effectively. That’s validated learning: knowledge as a deliverable. This handbook is now being used to spread that knowledge across the entire engineering team at Modus Create.

Fail first, fail early from Design Thinking by the Interaction Design Foundation: We deliberately tackled the riskiest parts of the AI experiment first. Prompt engineering, hallucination control, and output quality were the “dragons at the gate.” If those failed, the rest didn’t matter. So we hit them early, iterated fast, and reduced uncertainty upfront.

Measure what matters from Measure What Matters by John Doerr: Each hypothesis was framed as an OKR or a clear boolean. We avoided a “try this and see” approach, which kept the team aligned.

Doing agile right from the Manifesto for Agile Software Development: During the experiment, we kept communication tight, showed progress through working software, and adapted based on new data. No long spec docs. Just demos and meaningful discussions. We didn’t force a rigid plan. We let real-time learning shape our direction.

Running AI experiments: Structure over hope

When running our AI tooling experiment, we set strict boundaries:

- Each hypothesis had a validation method with a binary outcome

- Time spent on tasks was tracked via timesheets

- Code quality was assessed using SonarQube

- Work was done in sandbox environments with fake data

- Engineers followed strict communication and time-tracking protocols

- The work was timeboxed, not estimated
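Timesheet data only becomes evidence once it’s aggregated. The sketch below, in Python, assumes each timesheet entry is a simple (team, category, hours) tuple. It sums hours per team and task category and computes the relative change between a baseline team and an AI-assisted team. The function names and category labels are hypothetical; the experiment’s actual timesheet format isn’t described here.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# A timesheet entry: (team, task_category, hours). Category labels are
# hypothetical; agree on a taxonomy before the timebox starts.
Entry = Tuple[str, str, float]


def hours_by_category(entries: Iterable[Entry]) -> Dict[str, Dict[str, float]]:
    """Sum logged hours per team and per task category."""
    totals: Dict[str, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for team, category, hours in entries:
        totals[team][category] += hours
    # Convert nested defaultdicts to plain dicts for a predictable result.
    return {team: dict(categories) for team, categories in totals.items()}


def percent_change(baseline: float, treatment: float) -> float:
    """Relative change from baseline to treatment; negative means a reduction."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (treatment - baseline) / baseline * 100.0
```

For example, a baseline team logging 50 infrastructure hours against 25 for the AI-assisted team gives `percent_change(50.0, 25.0) == -50.0`, a 50% reduction. These numbers are illustrative only.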

This wasn’t improvisation. It was disciplined iteration. The goal wasn’t to ship a product. It was to understand how AI tools could affect product delivery models, team composition, and client outcomes.

Learning is the output of the experiment

If you’re asking your team to prove ROI before they experiment, you’re not experimenting. You’re executing. When driving AI adoption, learning is a significant part of ROI.

Our AI-augmented engineering team documented what worked and what didn’t. They tried various prompting strategies to manage complex logic. They built automated validation processes to catch and correct errors in AI-generated code. They also found that Figma-to-UI translation worked well with coding agents but required skilled prompt engineering.

None of these insights showed up in the first week. Or the second. By week four, however, they had become key productivity drivers. The team wasn’t just applying AI. They were learning how to collaborate with it, faster and more effectively than any doc or tutorial could teach them. They learned the practice, not just the theory.

That learning was the outcome. And it’s now embedded into how we scale AI-assisted coding in client projects.

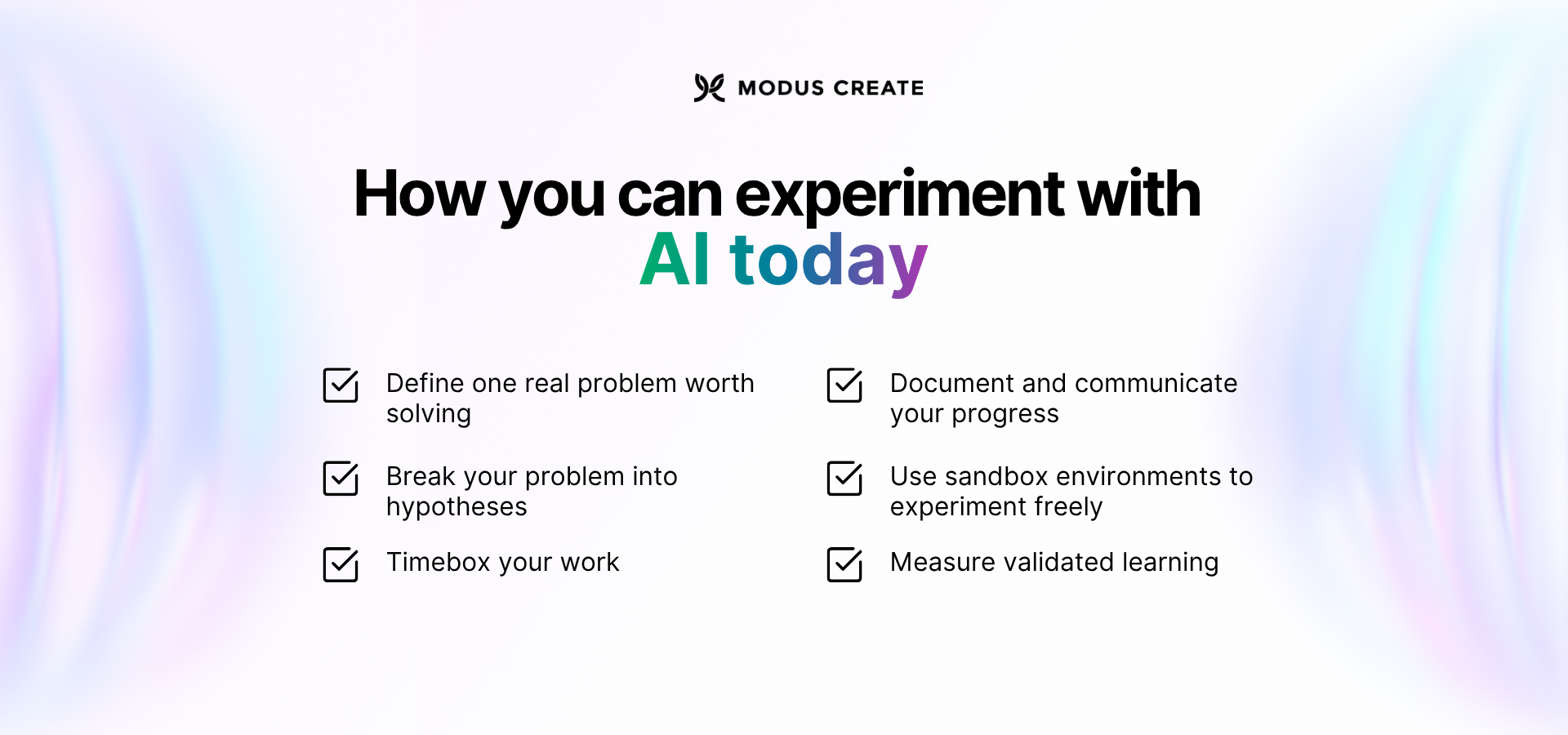

How you can experiment with AI today

You don’t need a full lab to start experimenting with AI. You just need a few rules:

Define one real problem worth solving. Not a side use case, not a “someday” wishlist item. A real friction point that, if solved, changes your trajectory and has a real impact on your business.

Break your problem into hypotheses. Use first-principles thinking: strip the problem down to its fundamentals and ask, “What’s actually true about this situation?” Then build your hypotheses from the ground up. A good hypothesis is stated in numeric, measurable terms. Hypotheses should be brainstormed and defined by the team.

Timebox your work. Don’t estimate. Commit to a timeframe (e.g., 2 or 4 weeks), and use that constraint to force decisions. The goal isn’t perfection. It’s discovery. This protects the team’s time, builds a feedback loop, and gives you a concrete window to reflect on outcomes. Then iterate as needed.

Document and communicate your progress. Set a rhythm: one doc, one demo, one discussion. Write down what you’re testing, what you’re learning, and what you’re changing. Meet regularly to share updates with the team and stakeholders. Use demos to show progress early, not just results. This keeps everyone aligned and opens the door for course correction. If the data shows you’re heading in the wrong direction, turn. That’s the point of the experiment.

Use sandbox environments to experiment freely. No real data. No risk to production. People should feel free to engage without fearing the impact of a failed experiment. That psychological safety keeps creativity alive and sustains momentum.

Measure validated learning. If you didn’t get a clear result, change the setup. Not every experiment needs to succeed. Failure is how we learn what doesn’t work.

Stop treating product development experiments like projects

If you need a 12-slide ROI deck and six approval gates before a team can run an internal learning experiment, you’re falling behind. AI experimentation doesn’t reward perfection. It rewards risk-taking, validated learning, and iteration.

Your role as a leader isn’t to have all the answers. It’s to create space for your teams to ask better questions and learn quickly from mistakes.

You don’t scale AI adoption through procurement alone. You scale it through a culture of curiosity, fearless learning, practical applicability, and shared knowledge.

Start small. Structure tightly. Measure what you learn. Iterate relentlessly. That’s how you build AI fluency before your competitors do.

Structured experimentation is your strategy. When things move too fast for long-term certainty, your most reliable path is rapid, validated learning. AI experiments aren’t a distraction from execution. They’re how you find the next best thing to execute.

In the game of AI maturity, the fastest learners win.

<b>Wesley Fuchter</b> is the Director of Software Engineering at Modus Create. He has over 13 years of experience building cloud-native applications for web and mobile. As a tech leader, he spends most of his time working closely with engineering, product, and design to solve customers' business problems. His experience sits at the intersection of hands-on coding, innovation, and people management, spanning AWS, Java, and TypeScript as well as startups and agile and lean practices.
